The IP name is the machine name, that is, the name of the physical machine being referred to. A cluster is simply a group of machines.

It is a unique identifier created while familiarizing the controls. During familiarization, a configuration is automatically created on the Jiffy server, in the format _Cluster_Name.

The componentization functionality of Jiffy enables a high degree of reusability. You can create your own reusable components and share them across projects. This reduces the time needed for further automation and helps standardize common procedures.

Jiffy lets you turn a frequently used group of steps (Jiffy nodes or automation steps that together perform a subtask) into a component that can be reused across tasks and processes. This avoids duplicated effort and data and reduces maintenance. A change to the subtask is made once, in the bundled component, and automatically applies to every task that uses that component.

Please refer to the section on Reusability and Maintenance < give link >

Ideally, we first need to identify the process and qualify it for RPA before we embark on any automation activity. This involves defining parameters such as efficiency, accuracy, quality, cost, and the possible FTE benefit compared with the existing process.

Once this has been established, the next step is to understand what kinds of automation are required (UI, DB, rule-based, or cognitive). After that, define a phased approach. It starts with a Discovery phase, followed by a detailed scope definition and the definition of exceptions. It also covers possible risks with their mitigation strategies, and a detailed implementation and testing phase before the automation is deployed to production.
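The FTE benefit mentioned above is often estimated with simple arithmetic. The sketch below uses one common back-of-the-envelope formula. This formula is an assumption for illustration, not a Jiffy-defined method. It takes the yearly manual hours (volume times handling time), multiplies them by the share of volume that is automated, and divides by the productive hours one FTE works per year. The function name, parameter names, and the 1,600-hour default are all hypothetical.

```python
def fte_benefit(
    transactions_per_year: float,
    minutes_per_transaction: float,
    automation_rate: float,
    productive_hours_per_fte: float = 1600.0,  # hypothetical yearly hours per FTE
) -> float:
    """Estimate how many full-time equivalents (FTEs) automation could free up.

    Assumed formula (illustrative only):
        FTE benefit = volume * handling time * automation rate / hours per FTE
    """
    if not 0.0 <= automation_rate <= 1.0:
        raise ValueError("automation_rate must be between 0 and 1")
    if productive_hours_per_fte <= 0:
        raise ValueError("productive_hours_per_fte must be positive")

    # Total manual effort per year, in hours
    manual_hours = transactions_per_year * minutes_per_transaction / 60.0
    # Portion of that effort the bot is expected to take over
    saved_hours = manual_hours * automation_rate
    return saved_hours / productive_hours_per_fte
```

For example, `fte_benefit(24000, 10, 0.8)` gives roughly 2 FTEs. 24,000 transactions of 10 minutes each take 4,000 hours. Automating 80% of them saves 3,200 hours, which is 2 FTEs at 1,600 hours each.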

Please speak to our RPA experts for further insights into defining your RPA journey.

Make sure you log in with the same credentials that you used while configuring the RPASS bot.

First, check whether the Spark process is running (for example, with ps -ef | grep spark). If it is not running, log in to the Jiffy server and start Spark with the following command:

```shell
/opt/docube/spark-2.3.2-bin-hadoop2.7/sbin/start-all.sh
```

The following sample output shows both the Spark master (org.apache.spark.deploy.master.Master) and worker (org.apache.spark.deploy.worker.Worker) processes running:

```
[docubeapp-usr@docubedev sbin]$ ps -ef | grep spark
docubea+ 53539 1 10 08:23 pts/1 00:00:03 /opt/jdk1.8.0_131/jre/bin/java -cp /opt/docube/spark-2.3.2-bin-hadoop2.7/conf/:/opt/docube/spark-2.3.2-bin-hadoop2.7/jars/* -Xmx1g org.apache.spark.deploy.master.Master --host docubedev --port 7077 --webui-port 8080
docubea+ 53660 1 18 08:23 ? 00:00:03 /opt/jdk1.8.0_131/jre/bin/java -cp /opt/docube/spark-2.3.2-bin-hadoop2.7/conf/:/opt/docube/spark-2.3.2-bin-hadoop2.7/jars/* -Xmx1g org.apache.spark.deploy.worker.Worker --webui-port 8081 spark://docubedev:7077
docubea+ 53744 53003 0 08:23 pts/1 00:00:00 grep --color=auto spark
```
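The check-and-start procedure above can be scripted. The sketch below is an illustration, not a Jiffy-provided tool. It assumes Python 3 is available on the Jiffy server and that Spark is installed at the path shown in the start command. It treats Spark as running only when both the master and worker Java classes seen in the sample output appear in `ps -ef`. The function names are hypothetical.

```python
import subprocess

# Spark start script path, taken from the start command above
START_ALL = "/opt/docube/spark-2.3.2-bin-hadoop2.7/sbin/start-all.sh"

# Main classes that appear in the command lines of the Spark daemons
MASTER_CLASS = "org.apache.spark.deploy.master.Master"
WORKER_CLASS = "org.apache.spark.deploy.worker.Worker"


def spark_status(ps_output: str) -> dict:
    """Report whether a Spark master and a Spark worker appear in `ps -ef` output."""
    status = {"master": False, "worker": False}
    for line in ps_output.splitlines():
        if MASTER_CLASS in line:
            status["master"] = True
        if WORKER_CLASS in line:
            status["worker"] = True
    return status


def ensure_spark_running() -> dict:
    """Start Spark with start-all.sh if the master or worker is missing.

    Returns the status that was observed before any start attempt.
    """
    ps_output = subprocess.run(
        ["ps", "-ef"], capture_output=True, text=True, check=True
    ).stdout
    status = spark_status(ps_output)
    if not all(status.values()):
        # start-all.sh starts the standalone master and its workers
        subprocess.run([START_ALL])
    return status
```

Run `ensure_spark_running()` on the Jiffy server as the user that owns the Spark installation. The status check is kept in a separate function, `spark_status()`, so it can also be reused on saved `ps` output.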

To handle a dynamic number of pages in task design, set the number of pages to the maximum possible number of pages. If a document has fewer pages, processing stops at its last page.

No. The previously created tasks run as usual.

The BOT can execute only one task at a time. Once the scheduled tasks have been executed and the BOT is available (free), it can be used to run other tasks.

When modifications are made in a scheduled task, the changes will reflect in the next run of the task.

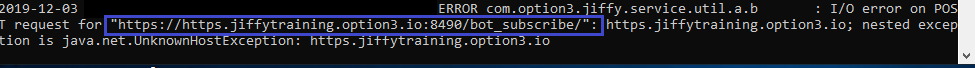

If the error message shown in the image above is displayed, check the Jiffy properties file as follows:

- Open C:\jiffyservice\jiffy.properties.

- Check the URL value. It should be: https://jiffytraining.option3.io:8484

- Ensure that the URL does not contain "https" twice.

- Save the jiffy.properties file.

- Restart the bot.
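The file checks in the steps above can be automated with a small script. The sketch below assumes Python 3 on the bot machine. The property key that holds the URL is not named here, so the script scans every non-comment line instead of a specific key. The sample key `url` used in testing is hypothetical. The function names are also illustrative only.

```python
from pathlib import Path

# Location of the properties file given in the steps above
PROPERTIES_PATH = Path(r"C:\jiffyservice\jiffy.properties")


def _setting_lines(properties_text: str):
    """Yield (line_number, line) for non-blank, non-comment lines."""
    for number, line in enumerate(properties_text.splitlines(), start=1):
        stripped = line.strip()
        # .properties files use "#" or "!" to start a comment line
        if stripped and not stripped.startswith(("#", "!")):
            yield number, stripped


def find_duplicate_https(properties_text: str) -> list:
    """Return (line_number, line) pairs in which "https" appears more than once."""
    return [
        (number, line)
        for number, line in _setting_lines(properties_text)
        if line.lower().count("https") > 1
    ]


def url_is_configured(properties_text: str, expected_url: str) -> bool:
    """Check whether any setting line contains expected_url.

    Java-style .properties files may escape ":" and "=" with a backslash,
    so those escapes are removed before comparing.
    """
    for _, line in _setting_lines(properties_text):
        unescaped = line.replace("\\:", ":").replace("\\=", "=")
        if expected_url in unescaped:
            return True
    return False


def check_properties_file(expected_url: str, path: Path = PROPERTIES_PATH) -> list:
    """Return human-readable problems found in the properties file."""
    text = path.read_text(encoding="utf-8", errors="replace")
    problems = [
        f"line {n}: 'https' appears more than once: {line}"
        for n, line in find_duplicate_https(text)
    ]
    if not url_is_configured(text, expected_url):
        problems.append(f"expected URL not found: {expected_url}")
    return problems
```

For example, `check_properties_file("https://jiffytraining.option3.io:8484")` returns an empty list when neither problem is found. Save any corrections and restart the bot as described above.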

The command prompt is not a desktop application, so by default Jiffy does not wait for commands run in the command prompt to finish. After a command is entered in the command prompt, Jiffy moves to the next step almost instantly. To make sure the commands finish executing before the next step starts, do the following:

- Store the commands in a .bat file.

- Write a custom expression in Python that does the following:

  - Reads the commands from the .bat file.

  - Inputs the commands into the command prompt.

  - Waits for the commands to finish executing.

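For example:

```python
import subprocess

# Path of the .bat file that contains the commands (placeholder: replace with your own)
file_path = r"C:\path\to\commands.bat"

# check_output() waits until the .bat file finishes and returns its output;
# it raises CalledProcessError if the batch file exits with a non-zero code
output = subprocess.check_output([file_path])
```

Here, file_path is the path of the .bat file.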

For more information, see the documentation on custom expressions.

- Run the .bat file by calling the custom expression from an Expression node. This ensures that all the commands in the .bat file finish executing before the task execution continues.
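If you need more control than the basic example gives, the sketch below makes the waiting behavior explicit. It runs the .bat file through cmd.exe, blocks until the batch file finishes, and raises an error on a non-zero exit code or when a time limit is exceeded. It assumes that the custom expression environment allows `subprocess`, as the example above does. The function names and the 600-second default timeout are hypothetical.

```python
import subprocess


def batch_command(bat_path: str) -> list:
    """Build a command line that runs a .bat file through cmd.exe."""
    # "/c" tells cmd.exe to run the given command and then exit
    return ["cmd.exe", "/c", bat_path]


def run_batch_and_wait(bat_path: str, timeout_seconds: float = 600) -> str:
    """Run a .bat file, block until it finishes, and return its standard output.

    Raises subprocess.CalledProcessError if the batch file exits with a
    non-zero code, and subprocess.TimeoutExpired if it runs longer than
    timeout_seconds (the child process is killed in that case).
    """
    completed = subprocess.run(
        batch_command(bat_path),
        capture_output=True,   # collect stdout/stderr instead of showing them
        text=True,
        errors="replace",      # avoid decode errors from console code pages
        timeout=timeout_seconds,
        check=True,
    )
    return completed.stdout
```

For example, `run_batch_and_wait(r"C:\path\to\commands.bat")` returns only after every command in the batch file has run. The task therefore continues only once that output is available.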